Updated July 5, 2021: Our journal paper describing top results was accepted for publication: D. Hyun, A. Wiacek, S. Goudarzi, S. Rothlübbers, A. Asif, K. Eickel, Y. C. Eldar, J. Huang, M. Mischi, H. Rivaz, D. Sinden, R.J.G. van Sloun, H. Strohm, M. A. L. Bell, Deep Learning for Ultrasound Image Formation: CUBDL Evaluation Framework & Open Datasets, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control (accepted July 1, 2021) [pdf]. While this journal paper includes only the top results, as initially advertised, all results are available below.

Participants

Four participants submitted networks that achieved the stated goals of Task 1. Their networks and training results are summarized on the CUBDL e-poster presented at IEEE IUS 2020 and in the following conference papers:

Participant A

S. Rothlübbers, H. Strohm, K. Eickel, J. Jenne, V. Kuhlen, D. Sinden, M. Günther, “Improving image quality of single plane wave ultrasound via deep learning based channel compounding,” in Proceedings of the 2020 IEEE International Ultrasonics Symposium, 2020 [pdf]

– submitted to Task 1a

Participant B

S. Goudarzi, A. Asif, and H. Rivaz, “Ultrasound beamforming using MobileNetV2,” in Proceedings of the 2020 IEEE International Ultrasonics Symposium, 2020 [pdf]

– submitted to Task 1a

Participant C

Y. Wang, K. Kempski, J. U. Kang, and M. A. L. Bell, “A conditional adversarial network for single plane wave beamforming,” in Proceedings of the 2020 IEEE International Ultrasonics Symposium, 2020 [pdf]

– submitted to Task 1a

Participant D

Z. Li, A. Wiacek, and M. A. L. Bell, “Beamforming with deep learning from single plane wave RF data,” in Proceedings of the 2020 IEEE International Ultrasonics Symposium, 2020 [pdf]

– submitted to Task 1b

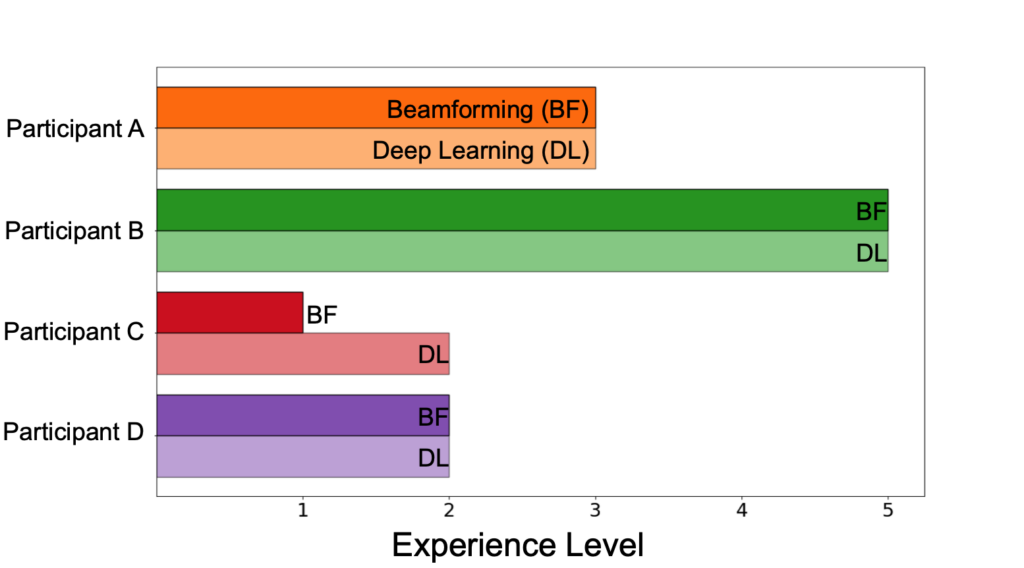

Wide Range of Experience Levels

The first author of each submission was asked to self-identify their level of experience with beamforming and deep learning prior to participation in the challenge on a scale of 1 to 5, with 1 being novice and 5 being expert. We received the following responses.

Results

Final Scores

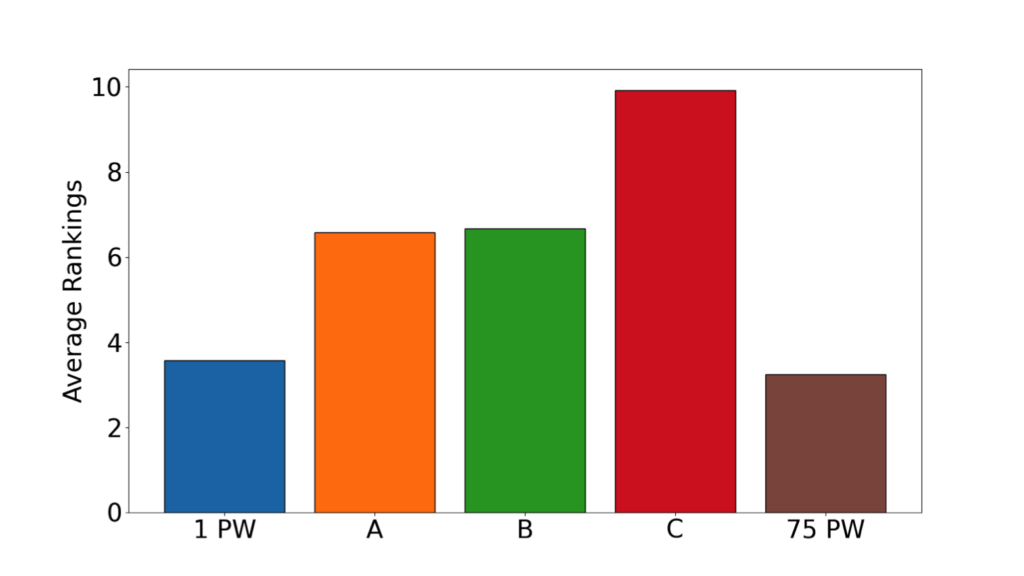

The final scores of the participants in Task 1a were compared to the scores of images obtained with single and multiple plane wave transmissions. Participant D went uncontested in Task 1b and thus did not compete in these rankings.

Challenge Co-Winners

The networks submitted by Participants A & B achieved equivalent scores, based on the guidelines and ranking structure outlined prior to participation. Therefore, the co-winners of the challenge are:

- Sven Rothlübbers et al. from Fraunhofer MEVIS — this submitted network had the least complexity

- Sobhan Goudarzi et al. from Concordia University — this network produced the best overall image quality

Runners-Up

The networks submitted by Participants C & D worked well on open data, but showed evidence of overfitting to the open data when evaluated on the closed CUBDL test data. This highlights the importance of a greater variety of open data and better access to it. These participants were declared the runners-up:

- Yaning Wang et al. from Johns Hopkins University

- Zehua Li et al. from Johns Hopkins University

Final Commendations

Congratulations to all participants! Everyone is commended for their submissions, particularly given the pandemic and the wide range of prior experience.

Additional quantitative details comparing the four participants are available below. These results were presented at the IEEE IUS live Challenge session on Friday, September 11, 2020.

CUBDL_IUS_Presentation_v4

Sound Speed Optimization: A Necessity to Achieve the Above Results

The CUBDL organizers solicited datasets to be used for the challenge evaluations described above. The sound speed metadata we received turned out to be very “noisy”: the speed of sound reported by data contributors on our data intake sheets often conflicted with the sound speed listed on phantom manufacturer data sheets. Because sound speed errors degrade overall ultrasound image quality, the CUBDL organizers took the same PyTorch DAS beamformer provided to participants and treated speed of sound as a learnable parameter, in an effort to keep evaluations fair despite these discrepancies. This produced substantial visual improvements, as shown in the example images below.

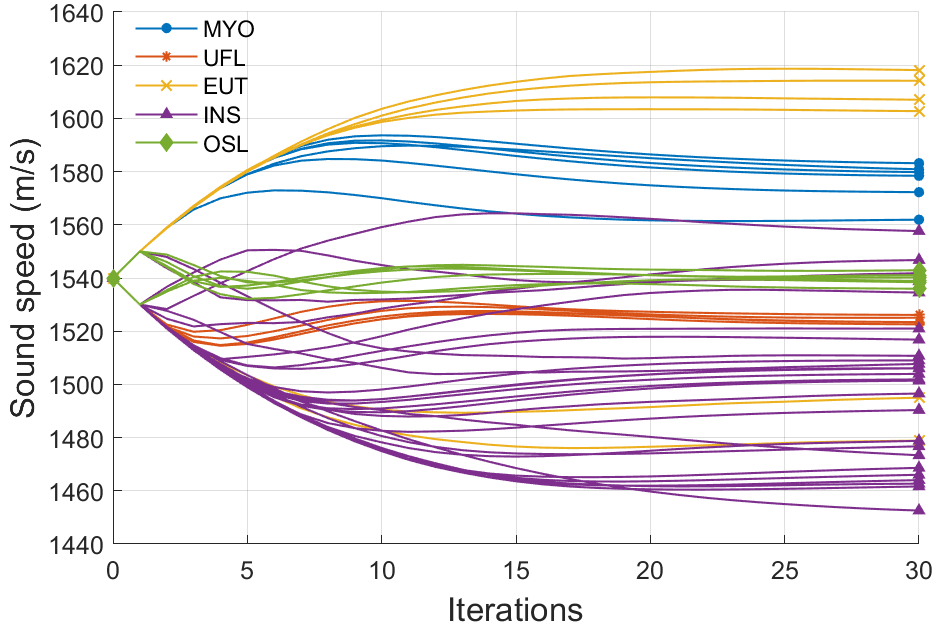

These results were obtained by gradient ascent with the Adam optimizer, iterating until speckle brightness was maximized. This sound speed correction approach was applied to all phantoms acquired with plane wave acquisitions, as summarized in the plot below, which starts with the sound speed reported on our data intake sheets and ends with the optimized sound speed for each phantom acquisition. The legend lists our 3-letter short codes for the five institutions that contributed the corresponding data.

These results are further stratified by institution and by phantom model in the plot below, with the black bar indicating the sound speed reported on data sheets from the phantom manufacturers (whenever available). The horizontal line indicates the sound speed reported by data contributors on our intake sheets. All values reported below are available with our released datasets and updated evaluation code.
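The idea behind this sound speed optimization can be illustrated with a small, dependency-free toy sketch. It is not the CUBDL PyTorch DAS beamformer and does not use CUBDL data: the geometry, the Gaussian echo model, the single point target (standing in for a speckle region), and every name and constant below are assumptions made for illustration. The gradient is written out by hand where a PyTorch implementation would rely on automatic differentiation.

```python
import math

# Hypothetical toy setup (not the CUBDL data or beamformer).
C_TRUE = 1540.0   # sound speed used to synthesize the echoes, m/s
DEPTH = 0.03      # depth of a single point target, m
SIGMA = 1e-6      # width of the Gaussian echo envelope, s (deliberately wide)
ELEMENTS = [(i - 15.5) * 0.6e-3 for i in range(32)]  # element x-positions, m

# Round-trip path per element: plane wave transmit depth plus receive distance.
PATHS = [DEPTH + math.hypot(DEPTH, x) for x in ELEMENTS]


def brightness_and_grad(c):
    """Mean delay-and-sum envelope at the target pixel and its derivative in c."""
    total, grad = 0.0, 0.0
    for d in PATHS:
        delta = d / c - d / C_TRUE  # delay error for the assumed speed c
        env = math.exp(-0.5 * (delta / SIGMA) ** 2)
        total += env
        # d(env)/dc = env * (-delta / SIGMA^2) * (-d / c^2)
        grad += env * (delta / SIGMA ** 2) * (d / c ** 2)
    n = len(PATHS)
    return total / n, grad / n


def optimize_sound_speed(c_init, steps=300, lr=1.0,
                         beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam updates with a plus sign, i.e. gradient ascent on brightness."""
    c, m, v = c_init, 0.0, 0.0
    for t in range(1, steps + 1):
        _, g = brightness_and_grad(c)
        m = beta1 * m + (1 - beta1) * g        # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g    # second-moment estimate
        m_hat = m / (1 - beta1 ** t)           # bias correction
        v_hat = v / (1 - beta2 ** t)
        c += lr * m_hat / (math.sqrt(v_hat) + eps)
    return c


c_start = 1480.0  # stands in for a reported, possibly wrong, sound speed
c_opt = optimize_sound_speed(c_start)
print(f"reported {c_start:.1f} m/s -> optimized {c_opt:.1f} m/s")
```

In this toy, the brightness peaks when the assumed sound speed matches the one used to synthesize the echoes, so the ascent moves the initial value toward `C_TRUE`. With real channel data the objective would instead be computed from beamformed speckle, and the step size and iteration count would need tuning.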

Summary of CUBDL Outcomes

Our challenge outcomes and contributions to the field include:

- Database of open-access raw ultrasound channel data (simulated, phantom, and in vivo data) acquired with plane wave and focused transmissions, 576 acquisitions total, crowd-sourced from seven ultrasound groups around the world

- Network descriptions and trained network weights from CUBDL winners

- PyTorch DAS beamformer containing multiple components that can be converted to trainable parameters

- Data sheet of phantom sound speeds, including reported sound speeds, manufacturer data sheet sound speeds (where available), and optimized sound speeds identified by the CUBDL organizers using the PyTorch DAS beamformer

- Evaluation code that integrates the four contributions listed above

These outcomes are summarized below with snapshots of the released materials. The integration of these contributions is anticipated to standardize and accelerate research that sits at the intersection of beamforming and deep learning.