Submission Requirements

Update as of April 24, 2020:

We are now accepting PyTorch and TensorFlow models (preferred over ONNX).

Participants are required to submit the following three files:

- The trained model, in one of the following formats:

  - For PyTorch, please provide a .py file that defines the submitted model as an nn.Module subclass and creates an instance of it, together with the corresponding state_dict file saved according to the procedure for “Saving & Loading Model for Inference using state_dict” described here: https://pytorch.org/tutorials/beginner/saving_loading_models.html#saving-loading-model-for-inference

  - For TensorFlow, please save the entire model in the SavedModel format as described here: https://www.tensorflow.org/tutorials/keras/save_and_load#savedmodel_format, and submit a zipped folder containing the assets and variables folders and the saved_model.pb file.

  - For any other framework, please convert the model to an ONNX (.onnx) file containing the network structure and trained weights.

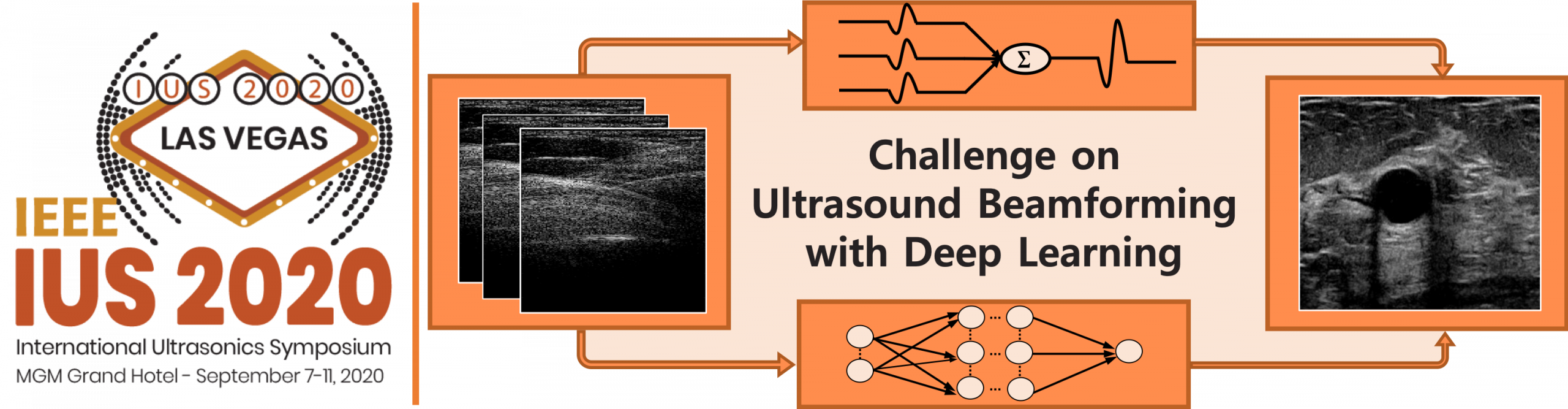

- A diagram of the network architecture showing where it fits into the overall beamforming pipeline

- A paper summarizing the details of the deep learning network, including the rationale for design choices, ideally linked to ultrasound imaging physics. Papers should follow the IUS 2020 proceedings guidelines.
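For the TensorFlow option, the SavedModel directory must be zipped before upload. The following is a minimal sketch using only the Python standard library; it is not an official CUBDL tool, and the helper names `zip_saved_model` and `missing_entries` are hypothetical. It assumes the SavedModel directory has already been written by TensorFlow (for example with `tf.saved_model.save`), zips it while keeping empty folders such as `assets/`, and then runs a loose check that `saved_model.pb`, `variables`, and `assets` all appear in the archive.

```python
import os
import zipfile
from typing import List

# Names expected inside a zipped TensorFlow SavedModel folder,
# per the submission requirements (assets, variables, saved_model.pb).
REQUIRED = ("saved_model.pb", "variables", "assets")


def zip_saved_model(model_dir: str, zip_path: str) -> None:
    """Zip a SavedModel directory, keeping its folder name as the top level.

    Hypothetical helper; zip_path should lie outside model_dir.
    """
    model_dir = os.path.abspath(model_dir)
    parent = os.path.dirname(model_dir)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for dirpath, _dirnames, filenames in os.walk(model_dir):
            # Writing the directory itself adds an explicit "name/" entry,
            # so empty folders such as assets/ are preserved in the archive.
            zf.write(dirpath, os.path.relpath(dirpath, parent))
            for name in filenames:
                full_path = os.path.join(dirpath, name)
                zf.write(full_path, os.path.relpath(full_path, parent))


def missing_entries(zip_path: str) -> List[str]:
    """Loose check: return required names that appear nowhere in the archive."""
    with zipfile.ZipFile(zip_path) as zf:
        components = set()
        for entry in zf.namelist():
            components.update(part for part in entry.split("/") if part)
    return [name for name in REQUIRED if name not in components]
```

For example, `zip_saved_model("my_model", "my_model.zip")` followed by `missing_entries("my_model.zip")` should return an empty list for a complete SavedModel (`my_model` is a placeholder path). Because the check only matches path components by name, it is a sanity check before upload, not a guarantee that TensorFlow can load the model.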

All three items listed above are required to be considered a challenge participant. The organizers will evaluate the submitted deep learning networks on a closed test dataset and rank them. All three items must be submitted through IEEE DataPort.

CUBDL Evaluation Workflow

To give challenge participants an overview of how submitted files are used during our evaluation, and for transparency, we present the following workflow:

- Participants train networks in TensorFlow, PyTorch, or any other popular machine learning framework

- Participants submit their model file to the IEEE DataPort website, in the format that matches their training framework (see details above)

- CUBDL organizers download the submitted models and supporting documents (see details above) from IEEE DataPort.

- We then run a Python script that performs the evaluation in the following steps:

  - Load the submitted model

  - Load the test data

  - Perform inference on the test data and obtain the outputs

  - Evaluate metrics on the outputs

  - Save the metrics to a file

- Organizers place the metrics into a ranking system

- Rankings will be made available to all participants after the submission deadline

- Participants will be notified of acceptance decisions based on the contents of the three required submission documents
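The five evaluation steps in the workflow can be sketched as a small harness that participants could use to test their own models locally. This is not the organizers' script: the function names, the flattened-list data types, and the `mean_abs_error` metric are hypothetical placeholders (the official metrics are not specified in this section), and a real harness would load a PyTorch, TensorFlow, or ONNX model rather than a plain Python callable.

```python
import json
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical stand-in types: a "model" maps one input frame to an output
# image, both flattened to lists of floats for simplicity.
Model = Callable[[Sequence[float]], List[float]]
Metric = Callable[[Sequence[float], Sequence[float]], float]
TestCase = Tuple[Sequence[float], Sequence[float]]  # (input, reference)


def mean_abs_error(output: Sequence[float], reference: Sequence[float]) -> float:
    """Placeholder metric for illustration, not an official CUBDL metric."""
    return sum(abs(o - r) for o, r in zip(output, reference)) / len(reference)


def run_evaluation(
    load_model: Callable[[], Model],
    load_test_data: Callable[[], List[TestCase]],
    metrics: Dict[str, Metric],
    out_path: str,
) -> List[Dict[str, float]]:
    model = load_model()            # 1. Load the submitted model
    cases = load_test_data()        # 2. Load the test data
    results = []
    for case_id, (frame, reference) in enumerate(cases):
        output = model(frame)       # 3. Perform inference and obtain the output
        # 4. Evaluate every metric on this output
        scores = {name: fn(output, reference) for name, fn in metrics.items()}
        results.append({"case": case_id, **scores})
    with open(out_path, "w") as f:  # 5. Save the metrics to a file (JSON)
        json.dump(results, f, indent=2)
    return results
```

Passing the loaders and metrics in as arguments keeps the loop independent of the submission format: only `load_model` would change between a PyTorch state_dict, a TensorFlow SavedModel, and an ONNX file.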